FLAIR

Artificial Intelligence challenges organised around geo-data and deep learning

Project maintained by IGNF

FLAIR #2: textural and temporal information for semantic segmentation from multi-source optical imagery 🌍🌱🏠🌳➡️🛩️🛰️

Challenge organized by IGN with the support of CNES and Connect by CNES, within the Copernicus / FPCUP project.

FLAIR #2 datapaper 📑 : https://arxiv.org/pdf/2305.14467.pdf

FLAIR #2 NeurIPS datapaper 📑 : https://proceedings.neurips.cc/paper_files/paper/2023/file/353ca88f722cdd0c481b999428ae113a-Paper-Datasets_and_Benchmarks.pdf

FLAIR #2 NeurIPS poster 📑 : https://neurips.cc/media/PosterPDFs/NeurIPS%202023/73621.png?t=1699528363.252194

FLAIR #2 repository 📁 : https://github.com/IGNF/FLAIR-2

FLAIR #2 challenge page 💻 : https://codalab.lisn.upsaclay.fr/competitions/13447 [closed]

Pre-trained models ⚡ : available upon request for now.

Context of the challenge

With this new challenge, participants are tasked with developing innovative solutions that effectively harness the textural information of single-date aerial imagery and the temporal/spectral information of Sentinel-2 satellite time series to improve semantic segmentation, domain adaptation and transfer learning. Your solutions should address the challenges of reconciling differing acquisition times and spatial resolutions, accommodating varying acquisition conditions, and handling the heterogeneity of semantic classes across locations.

Dataset description

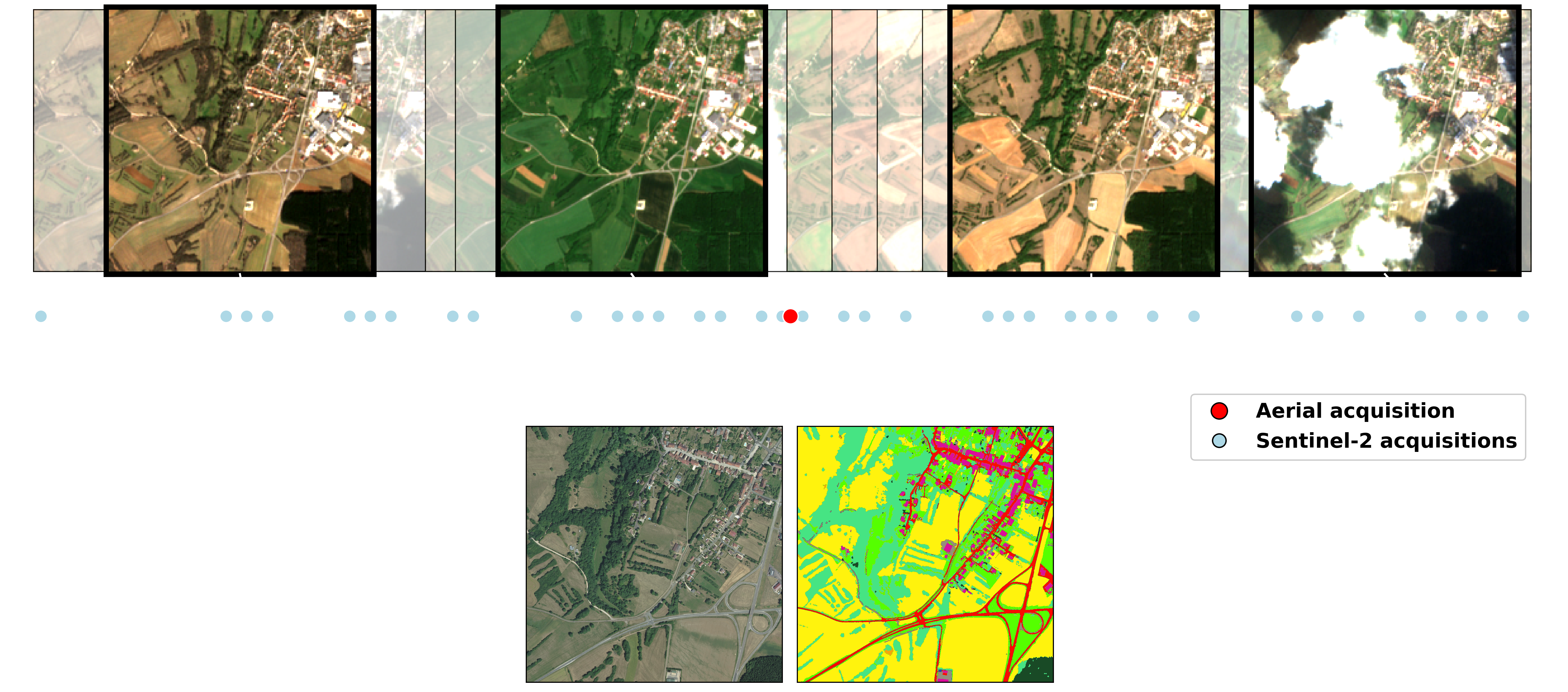

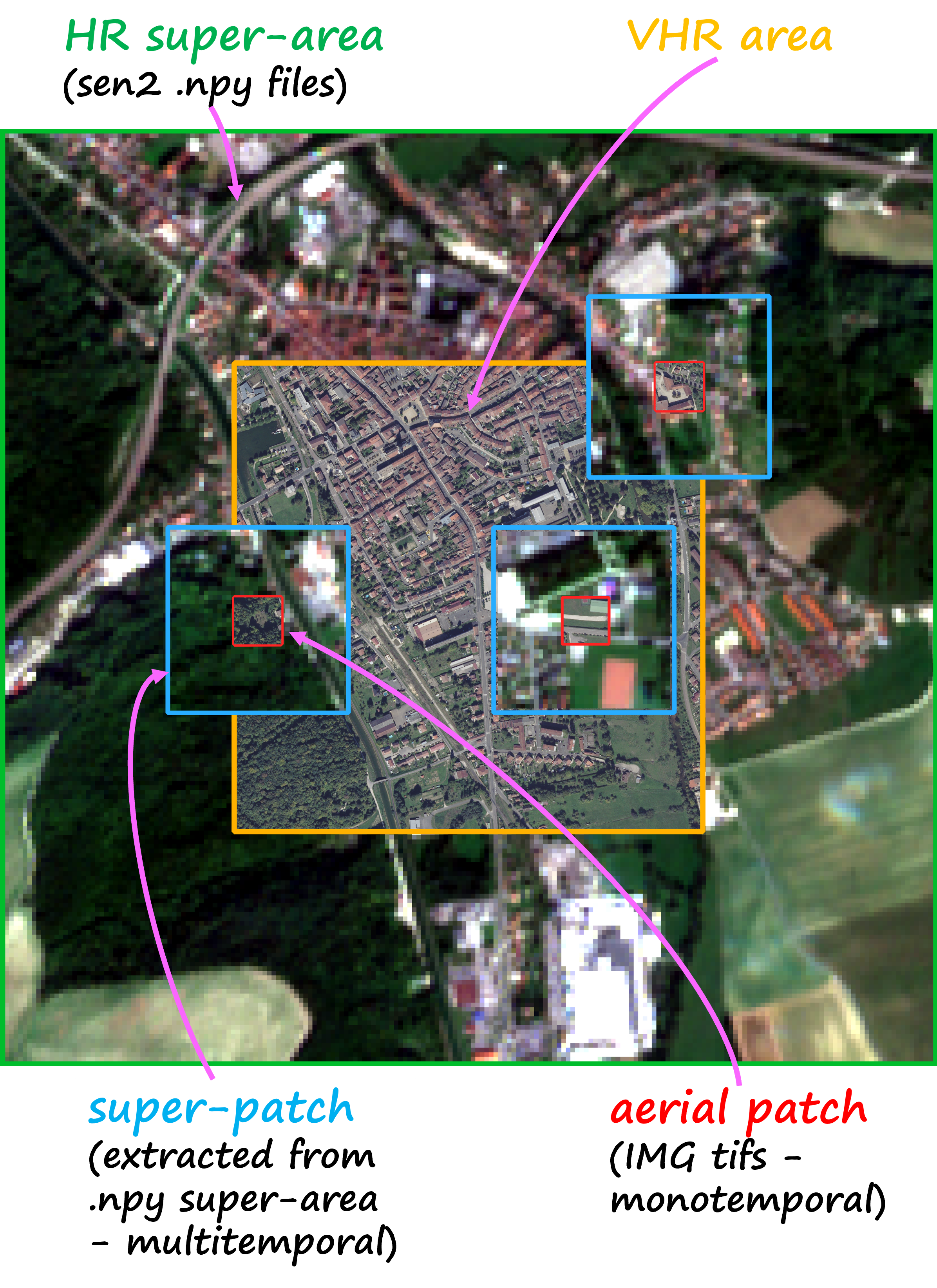

The FLAIR #2 dataset encompasses 20,384,841,728 annotated pixels of aerial imagery at a spatial resolution of 0.20 m, divided into 77,762 patches of 512x512 pixels. It also includes an extensive collection of satellite data, with a total of 51,244 acquisitions of Copernicus Sentinel-2 satellite images. For each area, a full year of acquisitions has been gathered, offering valuable insight into the spatio-temporal dynamics and spectral characteristics of the land cover. Because of the large difference in spatial resolution between aerial and satellite data, the initially defined areas provide too little satellite context: they cover only a few Sentinel-2 pixels. To address this, a buffer was applied around each area to create larger areas known as super-areas. This ensures that each patch of the dataset is associated with a sufficiently large super-patch of Sentinel-2 data, providing a minimum level of satellite context.
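The figures above can be checked with a little arithmetic: 77,762 patches × 512 × 512 pixels gives exactly the 20,384,841,728 annotated pixels, and a 512-pixel patch at 0.20 m spans 102.4 m per side. Assuming a Sentinel-2 pixel size of 10 m (the native resolution of its visible and near-infrared bands), that is only about 10 satellite pixels per side, which is why a larger super-patch is needed. The sketch below also shows one way a square super-patch window could be located around a patch centroid; the helper `super_patch_window`, the 40-pixel window and the grid origin are hypothetical illustrations, not part of the FLAIR #2 tooling.

```python
# Sanity-check the dataset figures quoted above and illustrate why a
# Sentinel-2 "super-patch" is needed. Values marked ASSUMPTION are not
# taken from the FLAIR #2 documentation.

AERIAL_RES_M = 0.20      # aerial ground sampling distance (from the text)
PATCH_SIZE_PX = 512      # aerial patch side in pixels (from the text)
N_PATCHES = 77_762       # number of aerial patches (from the text)
S2_RES_M = 10.0          # ASSUMPTION: 10 m Sentinel-2 pixels


def total_annotated_pixels(n_patches: int, size_px: int) -> int:
    """Number of labelled aerial pixels across all square patches."""
    return n_patches * size_px * size_px


def patch_footprint_in_s2_pixels(size_px: int, aerial_res: float, s2_res: float) -> float:
    """Side length of one aerial patch expressed in Sentinel-2 pixels."""
    return size_px * aerial_res / s2_res


def super_patch_window(cx_m, cy_m, origin_x_m, origin_y_m, s2_res, window_px):
    """HYPOTHETICAL helper: (row_min, row_max, col_min, col_max) of a square
    Sentinel-2 window centred on an aerial patch centroid (cx_m, cy_m),
    given the upper-left corner (origin_x_m, origin_y_m) of a north-up grid."""
    col = int((cx_m - origin_x_m) // s2_res)
    row = int((origin_y_m - cy_m) // s2_res)  # map y decreases as rows go down
    half = window_px // 2
    return row - half, row + half, col - half, col + half


if __name__ == "__main__":
    print(total_annotated_pixels(N_PATCHES, PATCH_SIZE_PX))  # 20384841728
    print(patch_footprint_in_s2_pixels(PATCH_SIZE_PX, AERIAL_RES_M, S2_RES_M))  # about 10.24
    # Patch centroid 1 km east and 1 km south of the grid's upper-left corner,
    # with an ASSUMED 40 x 40 pixel super-patch.
    print(super_patch_window(1000.0, 1000.0, 0.0, 2000.0, S2_RES_M, 40))  # (80, 120, 80, 120)
```

In a real pipeline the super-area rasters carry their own georeferencing, so the origin and pixel size would be read from the files rather than hard-coded.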

The dataset covers 50 spatial domains, encompassing 916 areas spanning 817 km². With 13 semantic classes (plus 6 not used in this challenge), this dataset provides a robust foundation for advancing land cover mapping techniques.

| Class | Value | Train frequency (%) | Test frequency (%) |
|---|---|---|---|
| building | 1 | 8.14 | 3.26 |
| pervious surface | 2 | 8.25 | 3.82 |
| impervious surface | 3 | 13.72 | 5.87 |
| bare soil | 4 | 3.47 | 1.6 |
| water | 5 | 4.88 | 3.17 |
| coniferous | 6 | 2.74 | 10.24 |
| deciduous | 7 | 15.38 | 24.79 |
| brushwood | 8 | 6.95 | 3.81 |
| vineyard | 9 | 3.13 | 2.55 |
| herbaceous vegetation | 10 | 17.84 | 19.76 |
| agricultural land | 11 | 10.98 | 18.19 |
| plowed land | 12 | 3.88 | 1.81 |
| swimming pool | 13 | 0.01 | 0.02 |
| snow | 14 | 0.15 | - |
| clear cut | 15 | 0.15 | 0.82 |
| mixed | 16 | 0.05 | 0.12 |
| ligneous | 17 | 0.01 | - |
| greenhouse | 18 | 0.12 | 0.15 |
| other | 19 | 0.14 | 0.04 |
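Since only 13 of the 19 label values are used in the challenge, the masks usually need remapping before training. A minimal sketch of one possible convention, assuming (as the table ordering suggests) that values 1 to 13 are the challenge classes: shift them to 0-12 and send values 14 to 19 to an ignore index. The names `remap_label` and `remap_mask` and the ignore value 255 are illustrative choices, not the official FLAIR #2 preprocessing; merging the unused classes into one extra class would be an equally valid alternative.

```python
# HYPOTHETICAL preprocessing convention, not necessarily the official
# FLAIR #2 pipeline: classes 1-13 become 0-12, the six unused classes
# (14-19) are mapped to an ignore index.

IGNORE_INDEX = 255          # ASSUMPTION: value skipped by the loss function
N_CHALLENGE_CLASSES = 13    # number of classes used in the challenge


def remap_label(value: int) -> int:
    """Map one raw label value to a training index."""
    if 1 <= value <= N_CHALLENGE_CLASSES:
        return value - 1
    if 14 <= value <= 19:
        return IGNORE_INDEX
    raise ValueError(f"unexpected label value: {value}")


def remap_mask(mask):
    """Apply remap_label to a 2-D mask given as a list of rows."""
    return [[remap_label(v) for v in row] for row in mask]


if __name__ == "__main__":
    print(remap_mask([[1, 13, 14], [19, 7, 2]]))  # [[0, 12, 255], [255, 6, 1]]
```

With NumPy arrays the same mapping can be written as a single vectorised `np.where(mask <= 13, mask - 1, 255)` call, and PyTorch's `torch.nn.CrossEntropyLoss` accepts an `ignore_index` argument that skips such pixels when computing the loss.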

Baseline model: U-T&T

We propose the U-T&T model, a two-branch architecture that combines spatial and temporal information from very high-resolution aerial images and high-resolution satellite images into a single output. The spatial/texture branch is a U-Net with a ResNet34 backbone pre-trained on ImageNet. The spatio-temporal branch is a U-TAE, which uses a Temporal self-Attention Encoder (TAE) to exploit the spatial and temporal characteristics of the Sentinel-2 time series and applies attention masks at different resolutions during decoding. The features learned by both branches are then fused, so that the prediction draws on both the mono-date aerial texture and the satellite time series.
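The fusion idea can be illustrated with a deliberately simplified, pure-Python sketch: a single fixed query vector stands in for the learned temporal attention, the time series of each Sentinel-2 pixel is collapsed into one feature vector by softmax-weighted averaging over dates, the result is upsampled to the aerial grid by nearest neighbour, and the two branches are concatenated channel by channel. The single-head attention, the concatenation rule and all function names are assumptions made for illustration, not the actual U-TAE or U-T&T implementation; in practice both branches would be neural network modules, and going from a 10 m grid to 0.20 m pixels corresponds to an upsampling factor of 50.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def temporal_attention_pool(series, query):
    """Collapse a per-pixel time series (T dates x C channels) into one
    C-vector. Each date is scored by dot(query, features) / sqrt(C); the
    softmax of the scores gives attention weights summing to 1 over time.
    In a real model the query would be learned; here it is a plain input."""
    c = len(query)
    scores = [sum(q * f for q, f in zip(query, feat)) / math.sqrt(c) for feat in series]
    weights = softmax(scores)
    pooled = [sum(w * feat[k] for w, feat in zip(weights, series)) for k in range(c)]
    return pooled, weights


def upsample_nearest(grid, factor):
    """Nearest-neighbour upsampling of a 2-D grid (rows of feature vectors)."""
    out = []
    for row in grid:
        wide = [cell for cell in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


def fuse_concat(aerial_feats, s2_feats):
    """ASSUMED fusion rule: concatenate both branches' channels pixel by pixel."""
    return [[a + s for a, s in zip(row_a, row_s)]
            for row_a, row_s in zip(aerial_feats, s2_feats)]


if __name__ == "__main__":
    # Toy 1 x 2 Sentinel-2 grid: 3 dates, 2 channels per date.
    s2_series = [[
        [[0.1, 0.5], [0.9, 0.4], [0.2, 0.3]],
        [[0.7, 0.1], [0.3, 0.2], [0.8, 0.6]],
    ]]
    query = [1.0, 0.0]  # stands in for a learned query vector
    s2_map = [[temporal_attention_pool(px, query)[0] for px in row] for row in s2_series]
    s2_up = upsample_nearest(s2_map, 2)                      # 2 x 4 grid
    aerial = [[[0.0] for _ in range(4)] for _ in range(2)]   # toy 1-channel U-Net features
    fused = fuse_concat(aerial, s2_up)
    print(len(fused), len(fused[0]), len(fused[0][0]))       # 2 4 3
```

Averaging with attention weights, rather than taking a plain mean over dates, lets the model emphasise the acquisitions that are most informative for each pixel, for example cloud-free dates.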

Download the dataset

| Data | Size | Type | Link |
|---|---|---|---|
| Aerial images - train | 50.7 GB | .zip | download |
| Aerial images - test | 13.4 GB | .zip | download |
| Sentinel-2 images - train | 22.8 GB | .zip | download |
| Sentinel-2 images - test | 6 GB | .zip | download |
| Labels - train | 485 MB | .zip | download |
| Labels - test | 108 MB | .zip | download |
| Aerial metadata | 16.1 MB | .json | download |
| Aerial <-> Sentinel-2 matching dict | 16.1 MB | .json | download |
| Satellite shapes | 392 KB | .gpkg | download |
| Toy dataset (subset of train and test) | 1.6 GB | .zip | download |

Alternatively, the dataset is available from our Hugging Face page.
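Once downloaded, the archives need to be unpacked. According to the table above, the full set of archives adds up to roughly 93.5 GB compressed (toy dataset excluded), and extraction needs additional room on top of that. A minimal sketch, assuming all `.zip` files sit in one folder and each is extracted into a sub-folder named after it; the folder layout and the helper names `has_free_space` and `extract_archives` are hypothetical. `zipfile.ZipFile.extractall` should only be used on trusted archives such as these.

```python
import shutil
import tempfile
import zipfile
from pathlib import Path

# Sum of the compressed sizes listed in the download table (GB), toy dataset excluded.
COMPRESSED_TOTAL_GB = 50.7 + 13.4 + 22.8 + 6 + 0.485 + 0.108 + 0.0161 + 0.0161 + 0.000392


def has_free_space(path, needed_gb):
    """True if the file system holding `path` has at least `needed_gb` GB free."""
    return shutil.disk_usage(path).free >= needed_gb * 1e9


def extract_archives(archive_dir, data_root):
    """Extract every .zip in archive_dir into data_root/<archive name>
    and return the list of folders that were filled."""
    archive_dir, data_root = Path(archive_dir), Path(data_root)
    created = []
    for archive in sorted(archive_dir.glob("*.zip")):
        target = data_root / archive.stem
        target.mkdir(parents=True, exist_ok=True)
        with zipfile.ZipFile(archive) as zf:
            zf.extractall(target)
        created.append(target)
    return created


if __name__ == "__main__":
    print(f"compressed archives: ~{COMPRESSED_TOTAL_GB:.1f} GB")
    # Self-contained demo on a throwaway archive instead of the real data.
    with tempfile.TemporaryDirectory() as tmp:
        src = Path(tmp) / "archives"
        src.mkdir()
        with zipfile.ZipFile(src / "toy.zip", "w") as zf:
            zf.writestr("img/example.txt", "hello")
        print(extract_archives(src, Path(tmp) / "data"))
```

For the real dataset, `has_free_space(data_root, COMPRESSED_TOTAL_GB)` gives a lower bound worth checking before starting the extraction.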

Reference

If you use the FLAIR #2 dataset, please cite the following paper:

Plain text:

Anatol Garioud, Nicolas Gonthier, Loic Landrieu, Apolline De Wit, Marion Valette, Marc Poupée, Sébastien Giordano and Boris Wattrelos. 2023.
FLAIR: a Country-Scale Land Cover Semantic Segmentation Dataset From Multi-Source Optical Imagery.
In Proceedings of Advances in Neural Information Processing Systems (NeurIPS) 2023.
DOI: https://doi.org/10.48550/arXiv.2310.13336

BibTex:

@inproceedings{ign2023flair2,
  title={FLAIR: a Country-Scale Land Cover Semantic Segmentation Dataset From Multi-Source Optical Imagery},
  author={Anatol Garioud and Nicolas Gonthier and Loic Landrieu and Apolline De Wit and Marion Valette and Marc Poupée and Sébastien Giordano and Boris Wattrelos},
  year={2023},
  booktitle={Advances in Neural Information Processing Systems (NeurIPS) 2023},
  doi={10.48550/arXiv.2310.13336},
}